k9s EKS Context Error

First off, if you use Kubernetes and haven’t used k9s yet, you’re missing out. I consider it the most essential tool after kubectl.

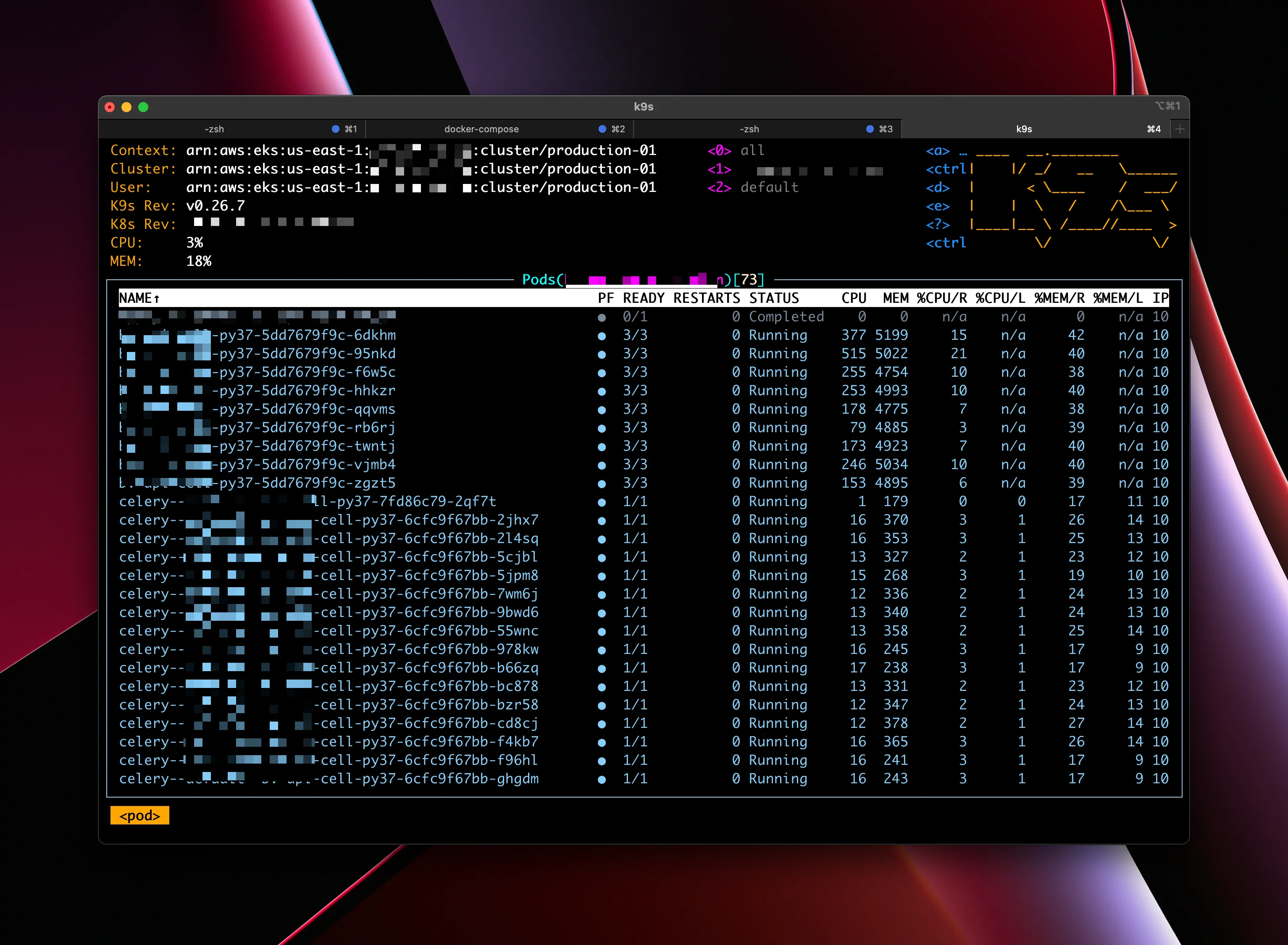

It provides a top-like interface to a k8s namespace, making it easy to inspect, kill, view logs for, or exec into your containers and get a shell.

Here is what it looks like when it’s working properly:

Some elements redacted for security reasons

The Symptom

During some Homebrew updates on my MacBook Pro last week, I updated to the latest version of k9s and didn’t think too much about it. I did, of course, quickly verify that it worked with our central REVSYS cluster, but not much else.

So I was a bit surprised today when I launched k9s to see:

It showed the defined contexts and a small error in the upper right-hand corner but not a ton to go on.

Turns out, this is a slight incompatibility between k9s and an existing KUBECONFIG that was generated by the aws-cli months and months ago for this cluster.

It took me a fair bit of Googling to find the answer, so I’m memorialising it here in case I run into it again. Hopefully, you also find it useful.

The Solution

The solution is easy to execute but not exactly intuitive. And I should note it’s specific to Amazon EKS as I haven’t run into this exact situation with any other managed Kubernetes clusters yet.

First, you need to update your awscli package to at least version 2.8.5. For OSX users, that’s a quick brew upgrade awscli.

Then you need to delete your KUBECONFIG (or, better yet, move it to another filename just in case), so the awscli generates a new one.

Then you need to run aws eks update-kubeconfig --name <cluster-name> for each of your clusters.

You can get a list of your clusters by running aws eks list-clusters.

    $ aws eks list-clusters
    {
        "clusters": [
            "development-01",
            "production-01"
        ]
    }

After this, you should be able to run k9s --namespace <namespace> and see a list of your pods like you would normally.
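If you have more than a couple of clusters, the steps above can be scripted. Here is a minimal Python sketch, not a polished tool. The function names are my own, and it assumes your KUBECONFIG lives at the default ~/.kube/config and that the aws command is on your PATH. It only uses the aws commands already mentioned in this post, plus the global --output json flag so the cluster list can be parsed.

```python
import json
import re
import shutil
import subprocess
from pathlib import Path

MIN_AWSCLI = (2, 8, 5)  # minimum awscli version named in this post


def parse_awscli_version(version_output):
    """Extract (major, minor, patch) from `aws --version` output."""
    match = re.search(r"aws-cli/(\d+)\.(\d+)\.(\d+)", version_output)
    if match is None:
        raise ValueError(f"unrecognised version string: {version_output!r}")
    return tuple(int(part) for part in match.groups())


def parse_cluster_names(list_clusters_json):
    """Pull the cluster names out of `aws eks list-clusters` JSON output."""
    return json.loads(list_clusters_json)["clusters"]


def update_command(cluster_name):
    """Build the argument list for one `aws eks update-kubeconfig` call."""
    return ["aws", "eks", "update-kubeconfig", "--name", cluster_name]


def regenerate_kubeconfig(kubeconfig=None):
    """Move the old kubeconfig aside, then regenerate an entry per cluster."""
    # Assumption: the default kubeconfig location is in use.
    kubeconfig = Path(kubeconfig) if kubeconfig else Path.home() / ".kube" / "config"
    version = subprocess.run(
        ["aws", "--version"], capture_output=True, text=True, check=True
    )
    if parse_awscli_version(version.stdout or version.stderr) < MIN_AWSCLI:
        raise RuntimeError("upgrade awscli first, e.g. brew upgrade awscli")
    if kubeconfig.exists():
        # Keep the old file as config.bak rather than deleting it outright.
        backup = kubeconfig.with_name(kubeconfig.name + ".bak")
        shutil.move(str(kubeconfig), str(backup))
    listing = subprocess.run(
        ["aws", "eks", "list-clusters", "--output", "json"],
        capture_output=True, text=True, check=True,
    )
    for name in parse_cluster_names(listing.stdout):
        subprocess.run(update_command(name), check=True)
```

Calling regenerate_kubeconfig() runs the whole sequence. Keep in mind that moving the file aside also drops any non-EKS contexts it contained, so those would need to be copied back from the .bak file by hand.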

The Problem

The problem is that deep in your old KUBECONFIG it was defining this:

    - name: arn:aws:eks:us-east-1:000000000000:cluster/production-01
      user:
        exec:
          apiVersion: client.authentication.k8s.io/v1alpha1
          args:
          - --region
          - us-east-1
          - eks
          - get-token
          - --cluster-name
          - production-01
          command: aws
          env: null

When it needed to be:

    - name: arn:aws:eks:us-east-1:000000000000:cluster/production-01
      user:
        exec:
          apiVersion: client.authentication.k8s.io/v1beta1
          args:
          - --region
          - us-east-1
          - eks
          - get-token
          - --cluster-name
          - production-01
          command: aws
          env: null
          provideClusterInfo: false

Can you spot the difference? Yeah, it took me a second as well. The difference is the apiVersion now needs to be client.authentication.k8s.io/v1beta1 instead of v1alpha1.

One of the reasons this is hard to diagnose is that kubectl continues to work perfectly the entire time, so it is very easy to assume the problem has nothing to do with your KUBECONFIG. Besides, it’s been working for months and months!
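Since kubectl gives you no hint, a quick way to confirm the diagnosis is to look for the old apiVersion directly. The following is a rough Python sketch rather than a proper YAML parser: it does a plain text match on each line, the function names are hypothetical, and it assumes the default ~/.kube/config location unless you pass a path.

```python
import re
from pathlib import Path

# Matches an exec credential apiVersion line that still uses v1alpha1.
STALE_API = re.compile(
    r"^\s*apiVersion:\s*client\.authentication\.k8s\.io/v1alpha1\s*$"
)


def stale_exec_lines(kubeconfig_text):
    """Return 1-based line numbers that still pin the v1alpha1 exec API."""
    return [
        number
        for number, line in enumerate(kubeconfig_text.splitlines(), start=1)
        if STALE_API.match(line)
    ]


def check_kubeconfig(path=None):
    """Print a hint for each stale line in the kubeconfig at `path`."""
    # Assumption: fall back to the default kubeconfig location.
    path = Path(path) if path else Path.home() / ".kube" / "config"
    for number in stale_exec_lines(path.read_text()):
        print(f"{path}:{number}: exec apiVersion is still v1alpha1")
```

If check_kubeconfig() prints anything, your file still has at least one entry using the old apiVersion and is a candidate for the regeneration steps in The Solution.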

If you have also run into this k9s issue with AWS EKS and an older KUBECONFIG file only showing you a list of the k8s contexts and not the expected list of Kube pods, I hope this helps solve it for you!

Frank Wiles

Founder of REVSYS, Django Steering Council, PSF Fellow, and former President of the Django Software Foundation.

Expert in building, scaling and maintaining complex web applications.
